Tips

- Use git worktrees

- Use ast-grep

- Turn on isolatedDeclarations in TSConfig

- “With isolated declarations, each file’s type information is self-contained and doesn’t require analyzing its dependencies. This means AI tools can understand a file’s types instantly without traversing the entire dependency graph, leading to much faster code analysis and suggestions.” (🤖)

- Use Gemini CLI to construct plans

- “Claude Code pro tip: Ask it to use Gemini CLI with its 1M context window and free plan in non-interactive mode to research your codebase, find bugs, and build a plan for Claude to action.” (X)

- “Chat priming”

- Use the Playwright browser automation MCP (X)

claude mcp add playwright npx '@playwright/mcp@latest'

- Or use Browser MCP

- Describe goals along with tasks.

- “Many times I find I get the best results by describing my goals instead of a specific task. The LLM can infer which tasks it needs to complete to achieve the goal.” (X)

- Tell the LLM, “Correct me if I’m wrong”, to combat its sycophantic tendencies.

- Track Claude Code usage using a tool like Iamshankhadeep/ccseva or Maciek-roboblog/Claude-Code-Usage-Monitor

- Use Wispr Flow for dictation

- Or superwhisper

- Use MacWhisper with Parakeet v2. It can “diarise a 30 minute podcast in under 8 seconds.” (Reddit)

- Useful for transcribing meetings.

- Use the Context7 MCP server (explanation)

- Use /clear

- cf. context rot: “a problem where long, irrelevant contexts degrade LLM performance.” (🤖)
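
As a rough illustration of the isolatedDeclarations tip above: with `"isolatedDeclarations": true` under `compilerOptions` in tsconfig.json (TypeScript 5.5 or later, alongside `declaration`), exported functions generally need explicit type annotations so that a file's declarations can be produced without looking at its imports. The names `User` and `findUser` below are hypothetical and exist only for this sketch.

```typescript
// Hypothetical module, written to satisfy isolatedDeclarations.
export interface User {
  id: number;
  name: string;
}

// The return type is spelled out rather than inferred. Under
// isolatedDeclarations, omitting it on an exported function is
// generally reported as an error.
export function findUser(
  users: readonly User[],
  id: number,
): User | undefined {
  return users.find((u) => u.id === id);
}

// Exported constants are annotated the same way, so the declaration
// can be read off the source line without evaluating the initializer.
export const ANONYMOUS: User = { id: 0, name: "anonymous" };
```

The practical cost is a little more typing at module boundaries. Non-exported helpers inside the file can still rely on inference.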

Low-hanging fruit

- Modernizing tests

- Expanding test coverage

Disciplines

- Resist the urge to code.

- Resist the urge to type.

- Resist the urge to monitor.

Memorable quotes

- “Cook or be cooked.” (Unknown)

- “The value of 90% of my skills just dropped to $0. The leverage for the remaining 10% went up 1000x. I need to recalibrate.” (Kent Beck)

- “The reason everything will not change quickly, even if AI generally exceeds human abilities across fields, is, in large part, the nature of systems. Organizational and societal change is much slower than technological change, even when the incentives to change quickly are there.” (Ethan Mollick)

- “We already don’t read compiled output today. Soon we may stop reading the LLMs code output, and merely validate results via tests.” (Cory House)

- “The best programmers in the future won’t be those who can code fast – it will be who can code the most in parallel.” (Ben Vinegar)

- “The race [is on] for LLM ‘cognitive core’ – a few billion param model that maximally sacrifices encyclopedic knowledge for capability. It lives always-on and by default on every computer as the kernel of LLM personal computing.” (Andrej Karpathy)

- “If you’re writing any variety of normal/standard code and AI isn’t writing 90-95% of it you’re probably building suboptimally.” (Austin Allred)

- “LLM is the brain, and MCP is the hands and feet.” (Wang Cheng)

- “Structuring your code for maximum parallelization is key.” (Matt Pocock)

- “Software will become so niche that we’ll have 10x the companies with 1/10 the employees.” (Dave Fano)

- “The people getting the most out of AI Agents right now are creating hyper precise prompts to describe what they want, and embracing the fact that their job is to review and edit the output. The moment you stop expecting perfection, your leverage goes up massively.” (Aaron Levie)

- “We are witnessing the end of IDEs.” (Andrew Denta)

- “Writing is not a second thing that happens after thinking. The act of writing is an act of thinking. Writing is thinking. Students, academics, and anyone else who outsources their writing to LLMs will find their screens full of words and their minds emptied of thought.” (Derek Thompson)

See also

- Software is changing again (YouTube), by Andrej Karpathy

- OpenCode – terminal-based AI assistant

- Jevons paradox – “when technological advancements make a resource more efficient to use (thereby reducing the amount needed for a single application); however, as the cost of using the resource drops, if the price is highly elastic, this results in overall demand increasing, causing total resource consumption to rise.”

- My AI Skeptic Friends Are All Nuts, by Thomas Ptacek

- Coding agents have crossed a chasm

- Claude Code AI Development Framework, by Peter Krueck

- claude-squad

- tidewave_rails

- Vibe Kanban

- Repomix – Pack your codebase into AI-friendly formats

- Why I’m Betting Against AI Agents in 2025 (Despite Building Them), by Utkarsh Kanwat

- models.dev

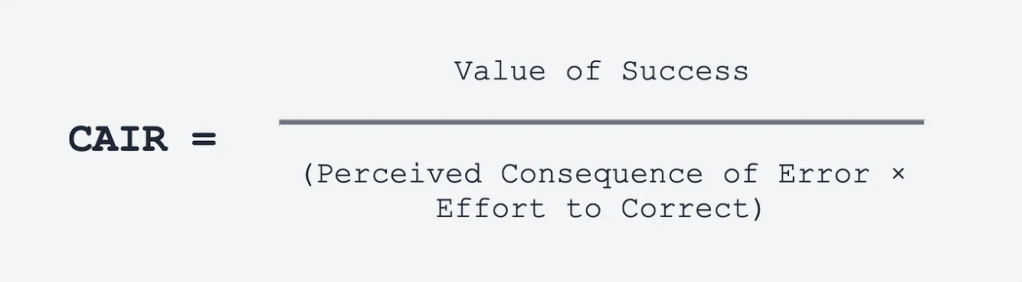

“CAIR” – Confidence in AI Results

The Hidden Metric That Determines AI Product Success, by Assaf Elovic

- “Value: The benefit users get when AI succeeds.”

- “Risk: The consequence if the AI makes an error.”

- “Correction: The effort required to fix AI mistakes.”

- “When CAIR is high, users embrace AI features enthusiastically. When CAIR is low, adoption stalls no matter how technically impressive your AI is.”
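
The three variables above can be combined into a single score. The sketch below assumes the ratio form CAIR = Value / (Risk × Correction), which the quotes here imply but do not state. The 1-to-5 scales, the example features, and the function name `cair` are all hypothetical.

```typescript
// Assumed inputs: each scored on an arbitrary positive scale (e.g. 1-5).
interface CairInputs {
  value: number;      // benefit when the AI succeeds
  risk: number;       // consequence when the AI makes an error
  correction: number; // effort required to fix a mistake
}

// Assumed formula: higher value raises the score, while higher risk
// or correction effort lowers it.
function cair({ value, risk, correction }: CairInputs): number {
  if (risk <= 0 || correction <= 0) {
    throw new RangeError("risk and correction must be positive");
  }
  return value / (risk * correction);
}

// Hypothetical comparison: a suggestion the user can ignore or undo
// versus an action that is costly to get wrong and costly to reverse.
const inlineSuggestion = cair({ value: 4, risk: 1, correction: 1 });
const unattendedDeploy = cair({ value: 5, risk: 5, correction: 4 });

console.log({ inlineSuggestion, unattendedDeploy });
```

Under these made-up numbers the lower-value feature scores far higher, which matches the claim that adoption tracks confidence rather than technical impressiveness.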

Five principles of CAIR optimization

- “Strategic human-in-the-loop (Optimizes all three variables)”

- “Reversibility (Reduces Correction)”

- “Consequence isolation (Reduces Risk)”

- “Transparency (Reduces Risk and Correction)”

- “Control gradients (Increases Value while managing Risk)”

- “Start with low risk features and progressively offer higher value capabilities as confidence builds.”